AI is not a ‘silver bullet’ against cyber attacks


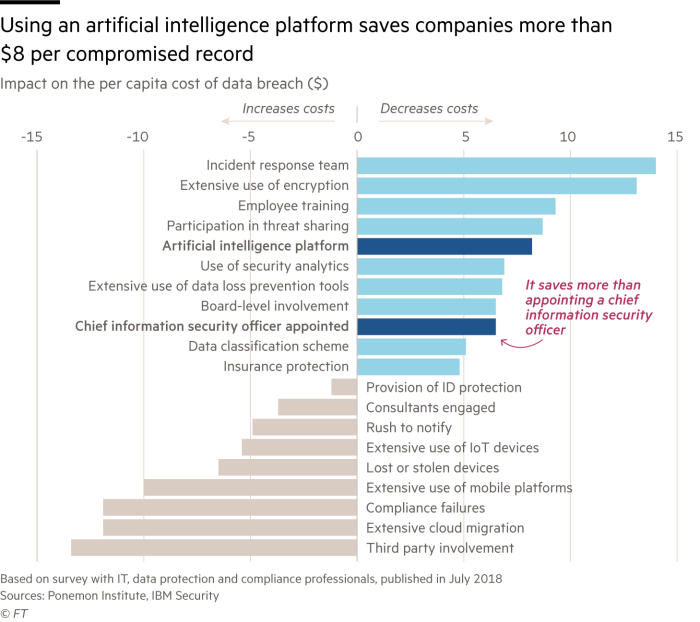

Artificial intelligence is emerging as a useful cyber security tool, but experts are warning companies not to view the technology as a “silver bullet”.

Many elements of cyber defence — particularly monitoring large amounts of data — can be better handled by machines than humans.

“It’s not so much that AI does it better, but that it works unendingly and consistently without getting tired,” says Daniel Miessler, director of advisory services at IOActive, a cyber security consultancy. At present, most security teams are only looking at “a tiny fraction — less than 1 per cent” of the data their organisations are producing, he says. In a large organisation, analysing the rest is such a monumental task that it can quickly overwhelm humans.

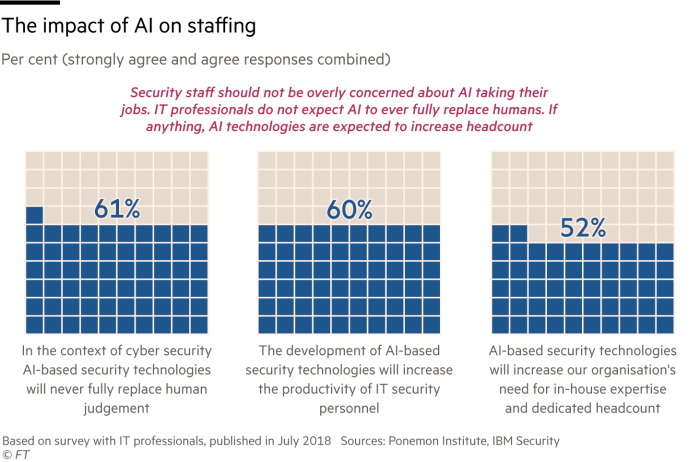

Moreover, cyber security professionals are in short supply and expensive.

“Data scientists who can do this work . . . command salaries of up to $600,000,” says Chris Moyer, vice-president and general manager of security at DXC Technology. “There is a constant battle for talent. We need technology that gives us coverage with fewer people.”

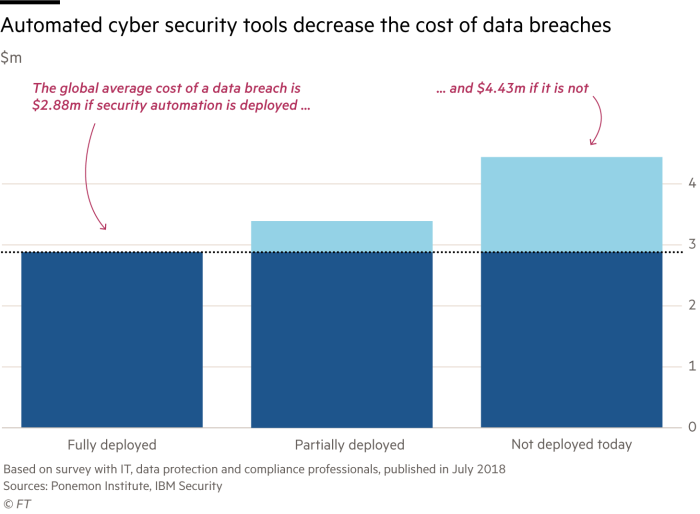

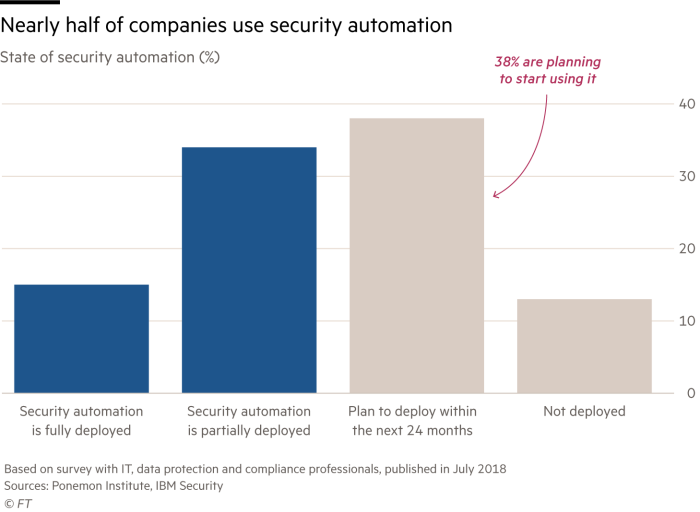

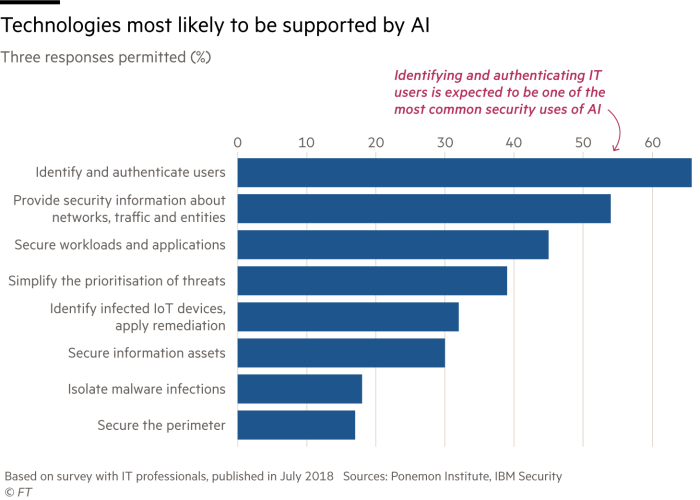

About half of companies surveyed in an IBM and Ponemon Institute study this year are deploying some kind of security automation, with a further 38 per cent planning to deploy a system within the next year. In recent years a host of start-ups, such as Endgame, Obsidian Security, PerimeterX, Respond Software and Versive, have emerged to offer AI security tools.

There is a danger, however, that AI is being overhyped as a solution for cyber security. The technology has its limitations, says Zulfikar Ramzan, chief technology officer at RSA, a network security company. “Where I’m sceptical is whether we can treat AI as a panacea.”

AI techniques were never designed to work in adversarial environments, Mr Ramzan says. “They have been successful in games such as chess and Go and on problems where the rules are very well defined and deterministic.

“But in cyber security the rules no longer apply. ‘Threat actors’ constantly adapt their technique in the hunt for ways to circumvent systems. In those environments, AI is unsuitable because it cannot adapt quickly enough.”

Deploying AI also carries some risk for organisations, because analysing data requires that it be gathered in one place. “That could be a point of failure,” Mr Ramzan says.

Rather than destroying or stealing data, an attacker might make subtle, almost unnoticeable changes to the system that would undermine the way the AI algorithm works.

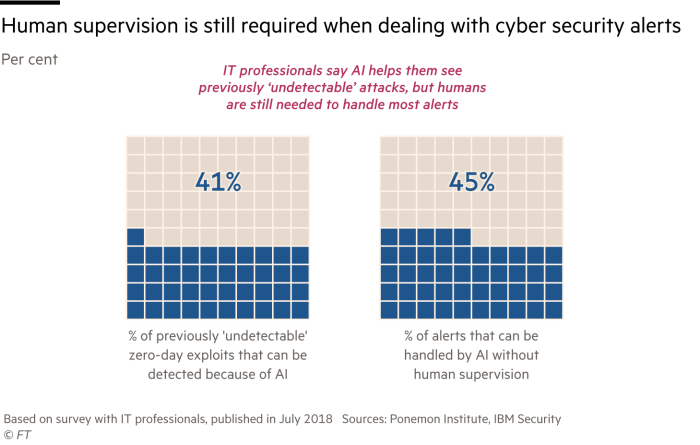

This is why, security analysts say, it is critical to understand how these systems work and not treat them just as black boxes or magic.
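To make the tampering risk concrete, here is a minimal sketch of how a few altered records could blunt a very simple detector. It is illustrative only: the detector (an alert threshold set at the mean plus three standard deviations), the traffic figures and the names `fit_threshold` and `is_anomalous` are all assumptions made for this example, not a description of any product or method mentioned by the people quoted. It also assumes the attacker can write to the stored history the detector learns from.

```python
from statistics import mean, stdev


def fit_threshold(samples, k=3.0):
    """Learn an alert threshold: mean plus k sample standard deviations."""
    return mean(samples) + k * stdev(samples)


def is_anomalous(value, threshold):
    """Flag any reading above the learned threshold."""
    return value > threshold


# Hypothetical baseline: megabytes sent per hour by one host.
clean_history = [100, 104, 98, 101, 97, 103, 99, 102, 100, 96]

# Assumed tampering: the attacker adds a handful of inflated readings
# to the stored history instead of deleting or stealing anything.
poisoned_history = clean_history + [130, 145, 160, 175, 190]

# The transfer the attacker eventually wants to go unnoticed.
exfiltration = 200

clean_threshold = fit_threshold(clean_history)
poisoned_threshold = fit_threshold(poisoned_history)

print("clean threshold:", round(clean_threshold, 1))
print("poisoned threshold:", round(poisoned_threshold, 1))
print("flagged with clean history:", is_anomalous(exfiltration, clean_threshold))
print("flagged with poisoned history:", is_anomalous(exfiltration, poisoned_threshold))
```

With the clean history the large transfer is flagged. Once the history has been padded, the learned threshold rises above it and the same transfer passes. Real systems are far more elaborate, but the sketch shows why an operator who treats the model as a black box would have little chance of noticing the shift.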

At the moment AI is fantastic at notifying users they have been compromised after the event, says Jason Hart, chief technology officer of data protection at Gemalto, a digital security company. “What we want it to do is identify when something suspicious happens, apply the appropriate security controls to mitigate the risk, then report back that it has noticed a potential attack, stopped it and protected the data.”

Mr Hart predicts that machine learning software that could do this automatically is three to four years away.

Hackers, meanwhile, will also be using AI to boost their attack capabilities. AI could, for example, be used to gather data for “spear phishing” campaigns, where individuals are targeted with highly personalised fake emails.

At present there is little evidence that online criminals are making extensive use of AI, says Mr Ramzan. “Hackers currently go for the minimum they need to do to compromise a system. There are many easier ways that don’t involve fancy AI techniques.” Nation states are most likely to be the first sources of AI-based attacks, he believes.

Mr Moyer warns, however, that in the corporate world “there is not a culture of sharing information on data security”. Online fraudsters, by contrast, are often quicker to distribute new tools and techniques among themselves, so once AI hacking tools are created, they can spread quickly.

This raises the prospect of cyber security becoming a battle of the machines. Mr Miessler believes that, for now, humans will have to stay involved in the process, but that their role will diminish as AI becomes more powerful.

The breakthrough will be with the arrival of artificial general intelligence (AGI), he says. “This is when software will be able not just to gather and examine data, but make its own decision to attack something.

“If and when AGI happens, we could potentially take the human out of the loop — but, most experts agree, that is quite a long way off.”
